Get the latest updates and insights from Google I/O 2019.

Great things are happening with Google Search, and we were excited to share them at Google I/O 2019!

In this post we'll focus on best practices for making JavaScript web apps discoverable in Google Search, including:

- The new evergreen Googlebot

- Googlebot's pipeline for crawling, rendering and indexing

- Feature detection and error handling

- Rendering strategies

- Testing tools for your website in Google Search

- Common challenges and possible solutions

- Best practices for SEO in JavaScript web apps

Meet the evergreen Googlebot

This year we announced the much-awaited new evergreen Googlebot.

Googlebot now uses a modern Chromium engine to render websites for Google Search. On top of that, we will test newer versions of Chromium to keep Googlebot updated, usually within a few weeks of each stable Chrome release. This announcement is big news for web developers and SEOs because it marks the arrival of 1000+ new features—such as ES6+, IntersectionObserver, and Web Components v1—in Googlebot.

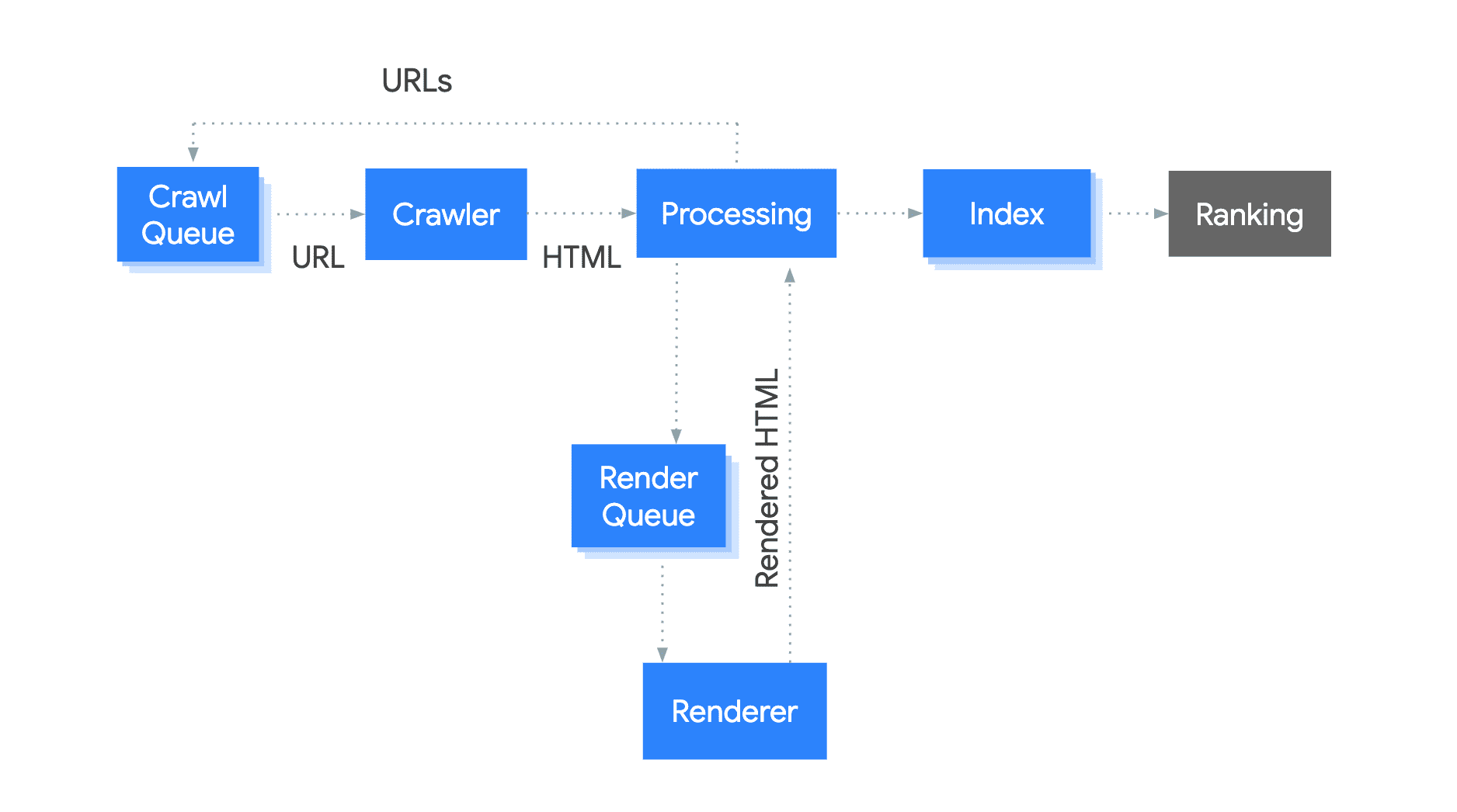

Learn how Googlebot works

Googlebot is a pipeline with several components. Let's take a look to understand how Googlebot indexes pages for Google Search.

The process works like this:

- Googlebot queues URLs for crawling.

- It then fetches the URLs with an HTTP request based on the crawl budget.

- Googlebot scans the HTML for links and queues the discovered links for crawling.

- Googlebot then queues the page for rendering.

- As soon as possible, a headless Chromium instance renders the page, which includes JavaScript execution.

- Googlebot uses the rendered HTML to index the page.

Your technical setup can influence the process of crawling, rendering, and indexing. For example, slow response times or server errors can impact the crawl budget. Similarly, if your links only appear after JavaScript runs, Googlebot can discover them only after rendering, which slows down their discovery.
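The effect on link discovery can be illustrated with a toy model of the pipeline described above. This is a simplified sketch, not how Googlebot is implemented: the `pages` map, the `simulate` function, and the `htmlLinks` / `renderedLinks` fields are hypothetical names made up for illustration, and the model simply assumes crawling always runs before any pending rendering.

```javascript
// Toy model of crawl -> render -> index. All names and data are hypothetical.
const pages = {
  '/': {
    htmlLinks: ['/about'],        // links present in the initial HTML
    renderedLinks: ['/products'], // links that only exist after JavaScript runs
  },
  '/about': { htmlLinks: [], renderedLinks: [] },
  '/products': { htmlLinks: [], renderedLinks: [] },
};

function simulate(startUrl) {
  const crawlQueue = [startUrl];
  const renderQueue = [];
  const seen = new Set([startUrl]);
  const log = [];

  // Queue a newly discovered URL for crawling and record where it was found.
  const enqueue = (url, stage) => {
    if (!seen.has(url)) {
      seen.add(url);
      crawlQueue.push(url);
      log.push(`${url} discovered during ${stage}`);
    }
  };

  while (crawlQueue.length > 0 || renderQueue.length > 0) {
    if (crawlQueue.length > 0) {
      // Crawl: fetch the HTML, queue the links found in it, then queue for rendering.
      const url = crawlQueue.shift();
      pages[url].htmlLinks.forEach((link) => enqueue(link, 'crawl'));
      renderQueue.push(url);
    } else {
      // Render: run JavaScript, queue links that only exist in the rendered HTML, index.
      const url = renderQueue.shift();
      pages[url].renderedLinks.forEach((link) => enqueue(link, 'render'));
      log.push(`${url} indexed`);
    }
  }
  return log;
}

console.log(simulate('/'));
```

In this model `/about` is found as soon as the start page is crawled, while `/products` has to wait until the start page has been rendered.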

Use feature detection and handle errors

The evergreen Googlebot has lots of new features, but some features are still not supported. Relying on unsupported features or not handling errors properly can mean Googlebot can't render or index your content.

Let's look at an example:

```html
<body>
  <script>
    navigator.geolocation.getCurrentPosition(function onSuccess(position) {
      loadLocalContent(position);
    });
  </script>
</body>
```

This page might not show any content in some cases, because the code doesn't handle the case where the user declines the permission request or where the getCurrentPosition call returns an error. Googlebot declines permission requests like this by default.

This is a better solution:

```html
<body>
  <script>
    if (navigator.geolocation) {
      // this browser supports the Geolocation API, request location!
      navigator.geolocation.getCurrentPosition(
        function onSuccess(position) {
          // we successfully got the location, show local content
          loadLocalContent(position);
        }, function onError() {
          // we failed to get the location, show fallback content
          loadGlobalContent();
        });
    } else {
      // this browser does not support the Geolocation API, show fallback content
      loadGlobalContent();
    }
  </script>
</body>
```
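The same pattern applies to other browser features. As a minimal sketch, assume a page that lazy-loads images with IntersectionObserver: the `loadImage` and `lazyLoadImages` functions and the `data-src` convention are hypothetical, and the `env` parameter exists only so that the function can be exercised outside a browser.

```javascript
// Copy the deferred URL into src so the image actually loads.
// Assumes each image keeps its real URL in data-src (a hypothetical convention).
function loadImage(img) {
  img.src = img.dataset.src;
}

// Lazy-load images when IntersectionObserver exists; otherwise load everything
// right away so that the content is never missing.
function lazyLoadImages(images, env = globalThis) {
  if (typeof env.IntersectionObserver !== 'function') {
    // Feature not supported: fall back to loading all images immediately.
    images.forEach(loadImage);
    return 'eager';
  }
  const observer = new env.IntersectionObserver((entries) => {
    entries.forEach((entry) => {
      if (entry.isIntersecting) {
        loadImage(entry.target);
        observer.unobserve(entry.target);
      }
    });
  });
  images.forEach((img) => observer.observe(img));
  return 'lazy';
}
```

In a browser this could be called as `lazyLoadImages(Array.from(document.querySelectorAll('img[data-src]')))`. The fallback branch matters most: when the feature is missing, the page still shows its images instead of leaving them empty.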

If you have problems getting your JavaScript site indexed, walk through our troubleshooting guide to find solutions.

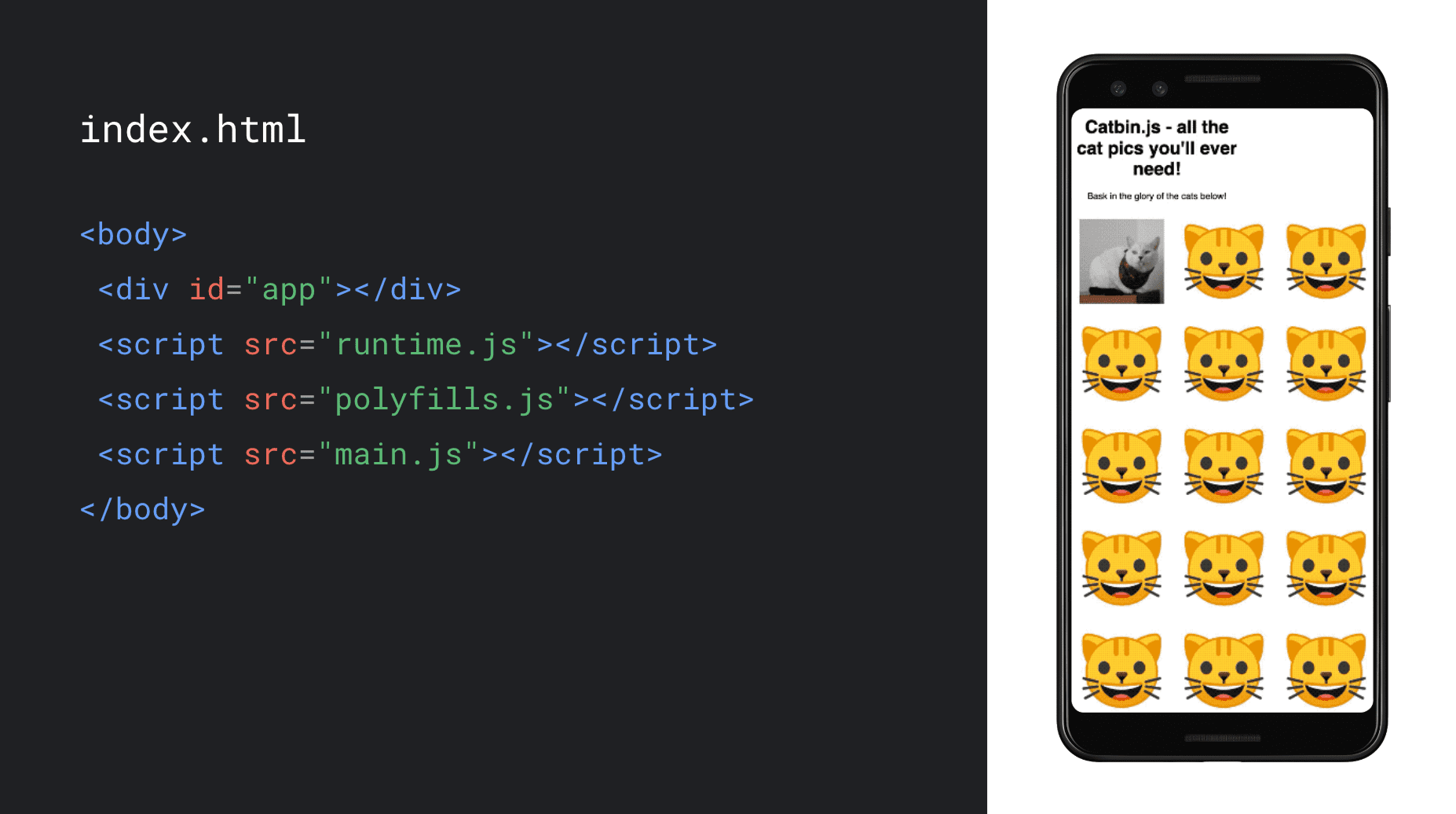

Choose the right rendering strategy for your web app

The default rendering strategy for single-page apps today is client-side rendering. The HTML loads the JavaScript, which then generates the content in the browser as it executes.

Let's look at a web app that shows a collection of cat images and uses JavaScript to render entirely in the browser.

If you're free to choose your rendering strategy, consider server-side rendering or pre-rendering. They execute JavaScript on the server to generate the initial HTML content, which can improve performance for both users and crawlers. These strategies allow the browser to start rendering HTML as it arrives over the network, making the page load faster. Both the rendering session at I/O and the blog post about rendering on the web show how server-side rendering and hydration can improve the performance and user experience of web apps, and both provide more code examples for these strategies.
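To make the idea concrete, here is a minimal sketch of server-side rendering for a cat image gallery like the one mentioned above. The `cats` data, the `renderCatPage` and `escapeHtml` functions, and the `/app.js` script path are hypothetical; a real app would typically rely on its framework's server renderer instead of string templates.

```javascript
// Hypothetical data; a real app would load this from a database or an API.
const cats = [
  { name: 'Tom', image: '/img/tom.jpg' },
  { name: 'Luna & Leo', image: '/img/luna-leo.jpg' },
];

// Escape text so it is safe inside HTML content and attribute values.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Build the complete initial HTML on the server, so the content is present
// before any JavaScript runs in the browser.
function renderCatPage(catList) {
  const items = catList
    .map((cat) => {
      const name = escapeHtml(cat.name);
      return `<li><img src="${escapeHtml(cat.image)}" alt="${name}"> ${name}</li>`;
    })
    .join('\n');
  return `<!DOCTYPE html>
<html>
<head><title>Cats</title></head>
<body>
<ul id="cats">
${items}
</ul>
<script src="/app.js"></script>
</body>
</html>`;
}

console.log(renderCatPage(cats));
```

On the server, the returned string would be sent as the response body, for example from a Node.js `http.createServer` handler. The client-side script can then attach event handlers to the existing markup rather than generating it from scratch.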

If you're looking for a workaround to help crawlers that don't execute JavaScript—or if you can't make changes to your frontend codebase—consider dynamic rendering, which you can try out in this codelab. Note, though, that you won't get the user experience or performance benefits that you would with server-side rendering or pre-rendering because dynamic rendering only serves static HTML to crawlers. That makes it a stop-gap rather than a long-term strategy.
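The core of dynamic rendering is a decision based on the requesting user agent. The sketch below shows only that decision, under stated assumptions: `CRAWLER_PATTERNS` is a hypothetical and incomplete list, and `isCrawler` and `chooseVariant` are illustrative names. Producing the static HTML itself (for example with a headless browser) is not shown.

```javascript
// Hypothetical, incomplete list of crawler user-agent substrings.
const CRAWLER_PATTERNS = ['googlebot', 'bingbot', 'linkedinbot', 'twitterbot'];

// Return true if the user-agent string looks like one of the listed crawlers.
function isCrawler(userAgent) {
  const ua = (userAgent || '').toLowerCase();
  return CRAWLER_PATTERNS.some((pattern) => ua.includes(pattern));
}

// Decide which variant of the page to serve for a request.
function chooseVariant(userAgent) {
  return isCrawler(userAgent) ? 'prerendered-html' : 'client-side-app';
}

console.log(chooseVariant('Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)'));
console.log(chooseVariant('Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/74.0 Safari/537.36'));
```

Both variants should carry the same content; only the way it is delivered differs.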

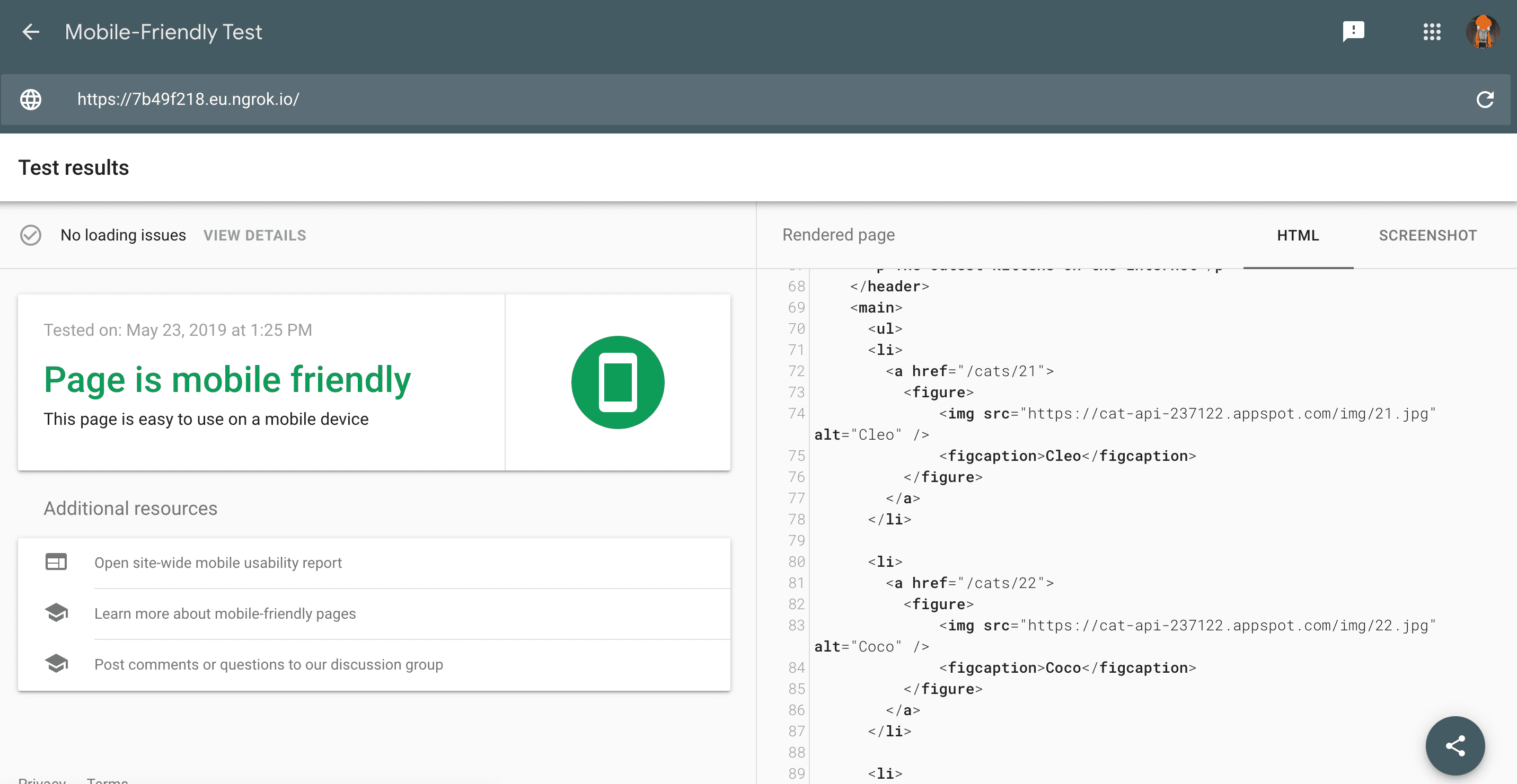

Test your pages

While most pages work fine with Googlebot, we recommend testing your pages regularly to make sure your content is available to Googlebot and there are no problems. There are several great tools to help you do that.

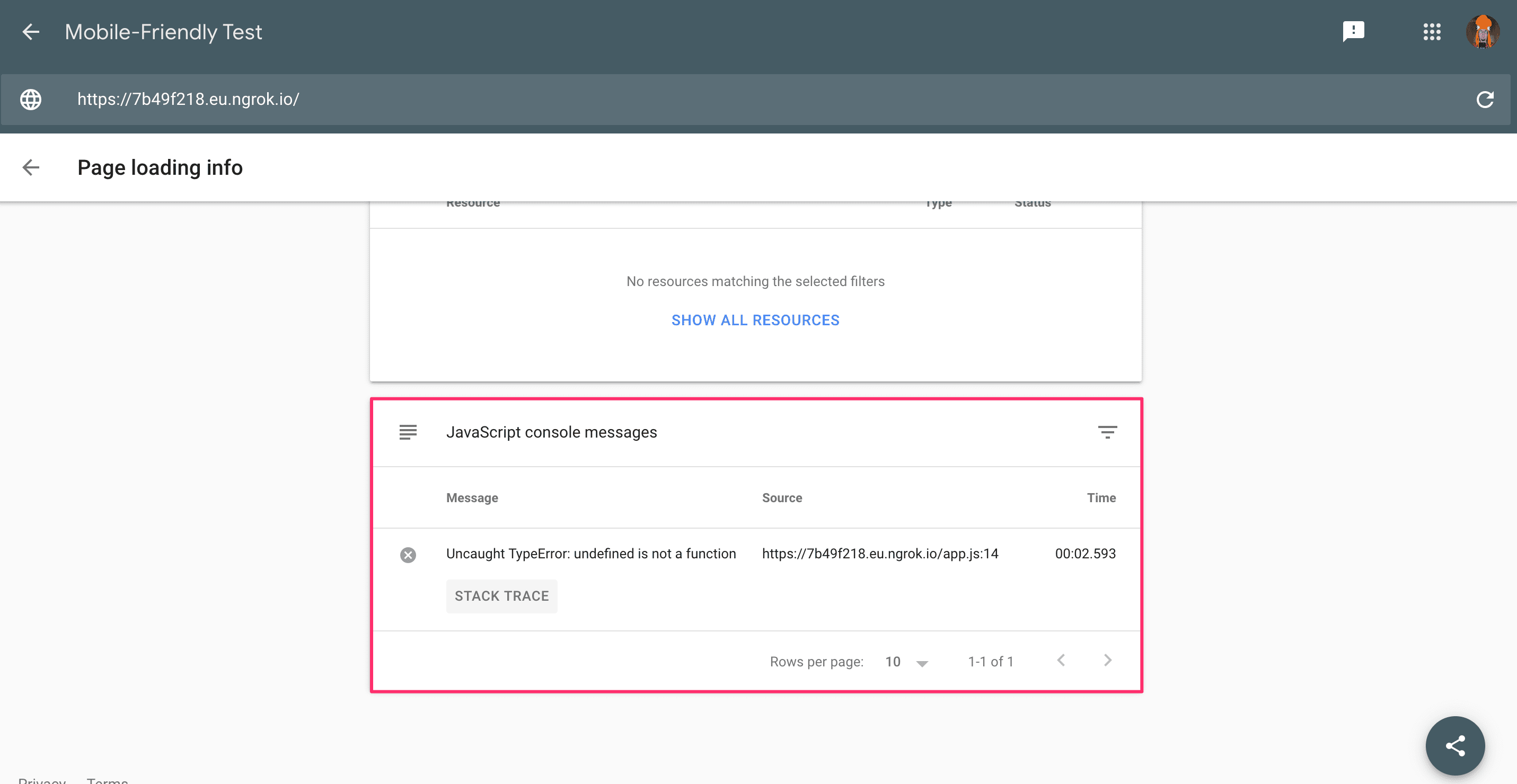

The easiest way to do a quick check of a page is the Mobile-Friendly Test. Besides showing you issues with mobile-friendliness, it also gives you a screenshot of the above-the-fold content and the rendered HTML as Googlebot sees it.

You can also find out if there are resource loading issues or JavaScript errors.

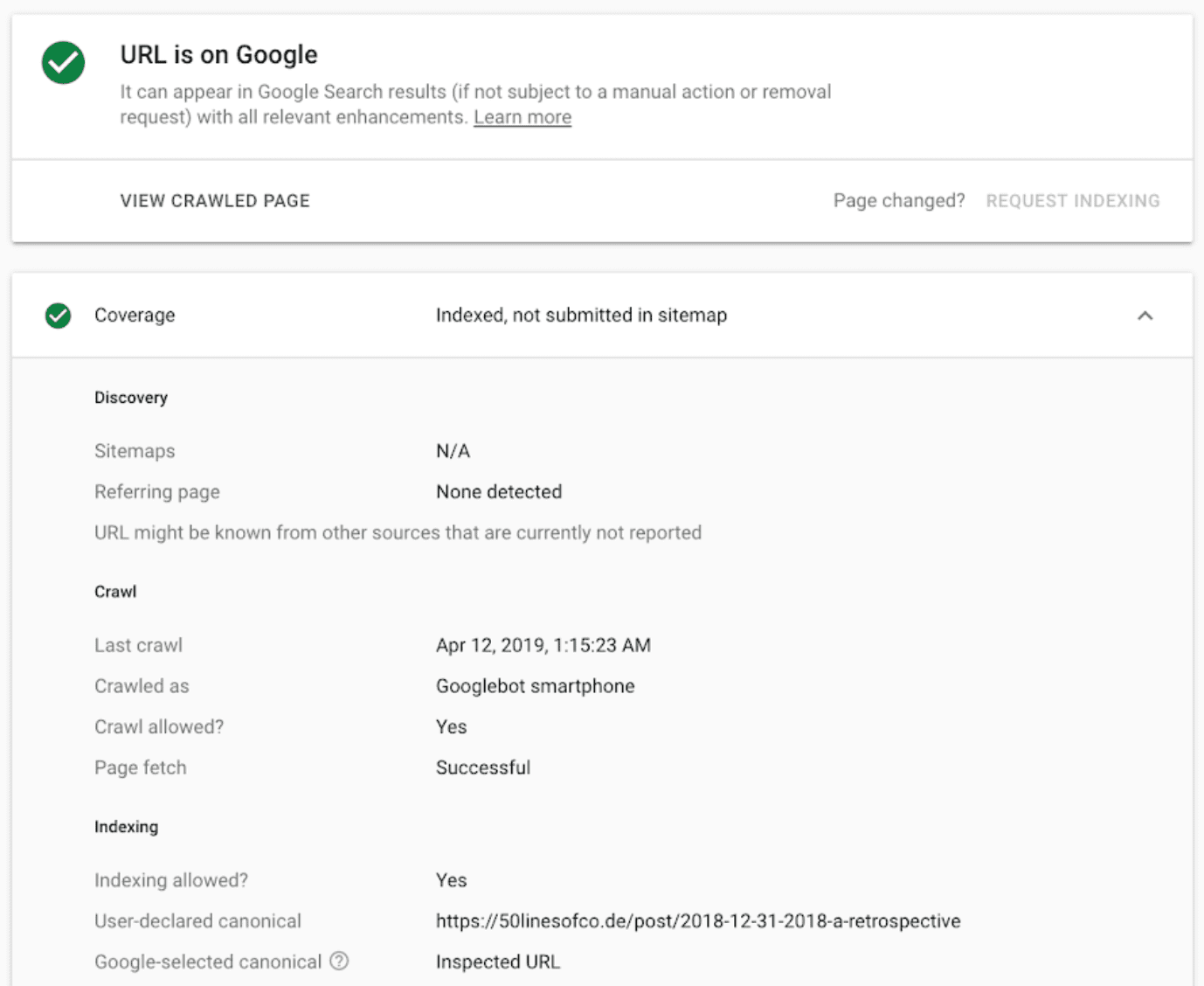

We recommend verifying your domain in Google Search Console so that you can use the URL inspection tool to find out more about the crawling and indexing state of a URL, receive messages when Search Console detects issues, and see more details of how your site performs in Google Search.

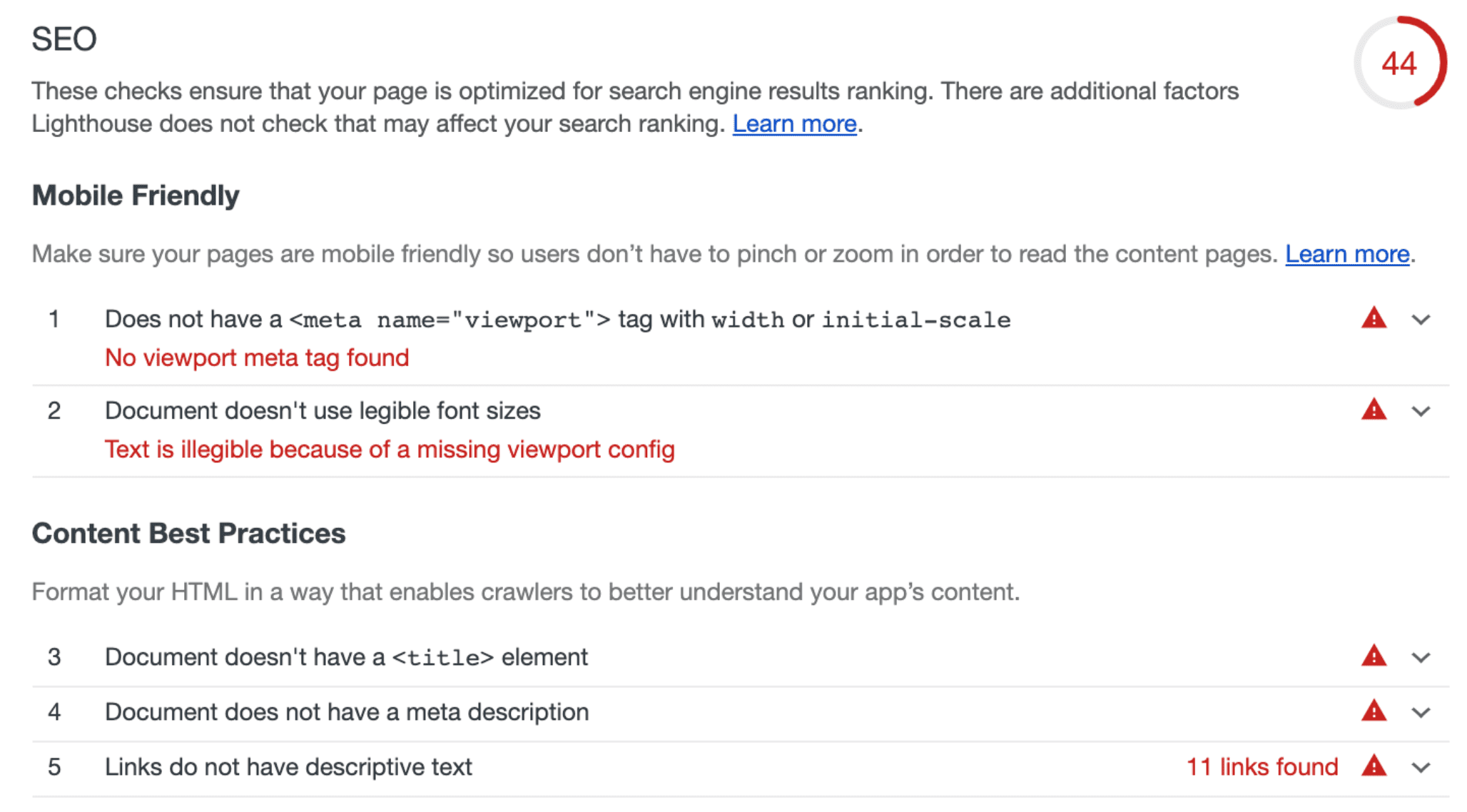

For general SEO tips and guidance, you can use the SEO audits in Lighthouse. To integrate SEO audits into your testing suite, use the Lighthouse CLI or the Lighthouse CI bot.
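As a sketch of how a test suite could act on the results, the function below takes a Lighthouse JSON report that has already been parsed into an object and lists the SEO audits that did not pass. It assumes the report layout used by recent Lighthouse versions (`categories.seo.auditRefs` and `audits[id].score`), so check it against the version you run. The `failingSeoAudits` function and the sample report are hypothetical.

```javascript
// Return the ids of SEO audits with a score below 1.
function failingSeoAudits(report) {
  const seo = report.categories && report.categories.seo;
  if (!seo) {
    throw new Error('Report has no SEO category');
  }
  return seo.auditRefs
    .map((ref) => report.audits[ref.id])
    // A null score is assumed to mean the audit is manual or not applicable.
    .filter((audit) => audit && audit.score !== null && audit.score < 1)
    .map((audit) => audit.id);
}

// Hand-written sample that mimics the assumed report layout.
const sampleReport = {
  categories: {
    seo: {
      auditRefs: [
        { id: 'document-title' },
        { id: 'meta-description' },
        { id: 'structured-data' },
      ],
    },
  },
  audits: {
    'document-title': { id: 'document-title', score: 1 },
    'meta-description': { id: 'meta-description', score: 0 },
    'structured-data': { id: 'structured-data', score: null },
  },
};

console.log(failingSeoAudits(sampleReport));
```

A test could fail the build whenever the returned list is not empty.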

These tools help you identify, debug, and fix issues with pages in Google Search and should be part of your development routine.

Stay up to date and get in touch

To stay up to date with announcements and changes to Google Search, keep an eye on our Webmasters Blog, the Google Webmasters YouTube channel, and our Twitter account. Also check out our developer guide to Google Search and our JavaScript SEO video series to learn more about SEO and JavaScript.